Motion Artefact Detection and Correction

This notebook shows how to identify and correct motion artefacts using xarray-based masks and cedalion's motion correction functions.

[1]:

# This cell sets up the environment when executed in Google Colab.

try:

    import google.colab

    !curl -s https://raw.githubusercontent.com/ibs-lab/cedalion/dev/scripts/colab_setup.py -o colab_setup.py

    # Select branch with --branch "branch name" (default is "dev")

    %run colab_setup.py

except ImportError:

    pass

[2]:

import matplotlib.pyplot as p

import cedalion

import cedalion.data as datasets

import cedalion.nirs

import cedalion.sigproc.motion as motion

import cedalion.sigproc.quality as quality

import cedalion.sim.synthetic_artifact as synthetic_artifact

import cedalion.vis.blocks as vbx

from cedalion import units

import numpy as np

np.random.seed(123)

[3]:

# get example finger tapping dataset

rec = datasets.get_fingertapping()

# for this example, restrict the data to the first 500 s

rec["amp"] = rec["amp"].sel(time=slice(0,500)).pint.quantify(units.V)

rec["od"] = cedalion.nirs.cw.int2od(rec["amp"])

rec["od_orig"] = rec["od"].copy()

# Add some synthetic spikes and baseline shifts

artifacts = {

"spike": synthetic_artifact.gen_spike,

"bl_shift": synthetic_artifact.gen_bl_shift,

}

timing = synthetic_artifact.random_events_perc(rec["od"].time, 0.01, ["spike"])

timing = synthetic_artifact.add_event_timing(

[(200, 0), (400, 0)], "bl_shift", None, timing

)

rec["od"] = synthetic_artifact.add_artifacts(rec["od"], timing, artifacts)

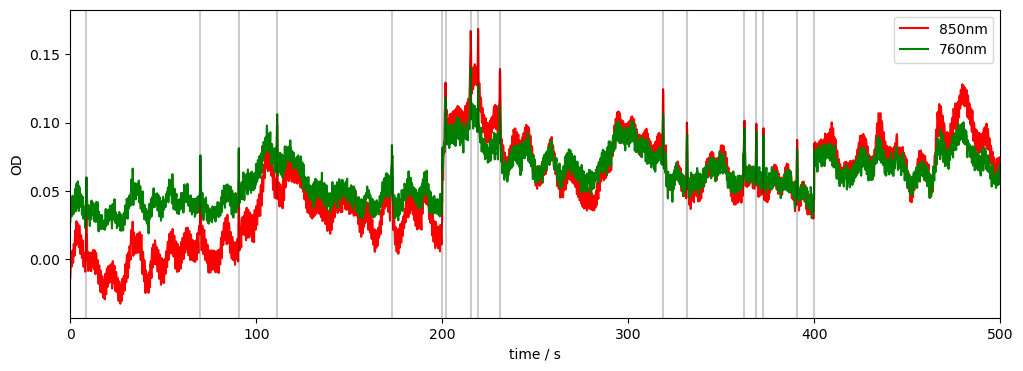

# Plot some data for visual validation

f, ax = p.subplots(1, 1, figsize=(12, 4))

ax.plot(

rec["od_orig"].time, rec["od_orig"].sel(channel="S3D3", wavelength="760"), "g-", label="760nm original", alpha=.3

)

ax.plot(

rec["od_orig"].time, rec["od_orig"].sel(channel="S3D3", wavelength="850"), "r-", label="850nm original", alpha=.3

)

ax.plot(

rec["od"].time, rec["od"].sel(channel="S3D3", wavelength="760"), "g-", label="760nm"

)

ax.plot(

rec["od"].time, rec["od"].sel(channel="S3D3", wavelength="850"), "r-", label="850nm"

)

# indicate added artefacts

for _, row in timing.iterrows():

    p.axvline(row["onset"], c="k", alpha=.2)

p.legend()

ax.set_xlabel("time / s")

ax.set_ylabel("OD")

display(rec["od"])

<xarray.DataArray (channel: 28, wavelength: 2, time: 3907)> Size: 2MB

<Quantity([[[-0.01054937 -0.00636963 -0.00675423 ... 0.05511473 0.05969659

0.05345172]

[-0.01965249 -0.023396 -0.00866389 ... 0.07284279 0.07500508

0.06883204]]

[[-0.01156617 -0.02113016 -0.00548485 ... 0.05419793 0.04778644

0.05110366]

[-0.02832346 -0.03174063 -0.01932882 ... 0.06573011 0.06610017

0.06349793]]

[[ 0.02811115 0.0266958 0.03874318 ... 0.02791607 0.02725005

0.02655649]

[ 0.0029083 0.00052207 0.01313342 ... 0.04293735 0.0438478

0.03986251]]

...

[[ 0.00515197 0.02070466 0.01656615 ... 0.03297096 0.02571791

0.01930209]

[-0.03122183 -0.00693761 -0.00851945 ... 0.06377562 0.05581499

0.04924211]]

[[ 0.03813224 0.05521111 0.0518739 ... 0.00231059 -0.00506158

-0.00298203]

[-0.00403551 0.01962673 0.01965482 ... 0.01716055 0.00971772

0.00323346]]

[[ 0.02112037 0.02431872 0.02390222 ... -0.00273218 -0.00436366

-0.00462488]

[-0.00099824 0.00551138 0.00681528 ... 0.01127112 0.00951012

0.0075453 ]]], 'dimensionless')>

Coordinates:

* time (time) float64 31kB 0.0 0.128 0.256 0.384 ... 499.7 499.8 500.0

samples (time) int64 31kB 0 1 2 3 4 5 ... 3901 3902 3903 3904 3905 3906

* channel (channel) object 224B 'S1D1' 'S1D2' 'S1D3' ... 'S8D8' 'S8D16'

source (channel) object 224B 'S1' 'S1' 'S1' 'S1' ... 'S8' 'S8' 'S8'

detector (channel) object 224B 'D1' 'D2' 'D3' 'D9' ... 'D7' 'D8' 'D16'

* wavelength (wavelength) float64 16B 760.0 850.0

[4]:

display(timing)

| | onset | duration | trial_type | value | channel |

|---|---|---|---|---|---|

| 0 | 348.212306 | 0.185842 | spike | 1 | None |

| 1 | 113.418468 | 0.265394 | spike | 1 | None |

| 2 | 359.711462 | 0.226932 | spike | 1 | None |

| 3 | 490.350715 | 0.305449 | spike | 1 | None |

| 4 | 240.450561 | 0.217635 | spike | 1 | None |

| 5 | 171.578026 | 0.318715 | spike | 1 | None |

| 6 | 219.272088 | 0.117903 | spike | 1 | None |

| 7 | 199.009390 | 0.321399 | spike | 1 | None |

| 8 | 91.240025 | 0.152636 | spike | 1 | None |

| 9 | 265.758677 | 0.259548 | spike | 1 | None |

| 10 | 317.180178 | 0.354830 | spike | 1 | None |

| 11 | 362.204480 | 0.283307 | spike | 1 | None |

| 12 | 361.198573 | 0.196888 | spike | 1 | None |

| 13 | 180.882751 | 0.168479 | spike | 1 | None |

| 14 | 146.847624 | 0.289293 | spike | 1 | None |

| 15 | 46.049523 | 0.230110 | spike | 1 | None |

| 16 | 215.417594 | 0.248106 | spike | 1 | None |

| 17 | 212.901519 | 0.193678 | spike | 1 | None |

| 18 | 213.162010 | 0.368017 | spike | 1 | None |

| 19 | 472.049796 | 0.250551 | spike | 1 | None |

| 20 | 311.956509 | 0.134686 | spike | 1 | None |

| 21 | 200.000000 | 0.000000 | bl_shift | 1 | None |

| 22 | 400.000000 | 0.000000 | bl_shift | 1 | None |

Detecting Motion Artifacts and generating the MA mask

The example below shows how to check channels for motion artefacts using standard thresholds from Homer2/3. The output is a mask that can be handed to motion correction algorithms that require segments flagged as artefact.
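To make the role of the thresholds concrete, here is a minimal numpy sketch of a windowed detector for a single 1D signal. It is a simplified illustration, not cedalion's or Homer's implementation: the function name `detect_motion_sketch` and the way the standard deviation is estimated are assumptions made for this example. A sample is flagged when the signal changes by more than `stdev_thresh` times the typical sample-to-sample variability, or by more than `amp_thresh`, within `t_motion`. Each detection is then widened by `t_mask` on both sides.

```python
import numpy as np

def detect_motion_sketch(x, fs, t_motion=0.5, t_mask=1.0,
                         stdev_thresh=7.0, amp_thresh=5.0):
    """Return a boolean mask for a 1D signal (True = clean, False = artifact)."""
    n_motion = max(1, int(round(t_motion * fs)))  # window length in samples
    n_mask = int(round(t_mask * fs))              # padding in samples

    # typical sample-to-sample variability (assumption of this sketch)
    step_std = np.std(np.diff(x))

    tainted = np.zeros(x.size, dtype=bool)
    # compare every sample with each of the next n_motion samples
    for lag in range(1, n_motion + 1):
        change = np.abs(x[lag:] - x[:-lag])
        hit = (change > stdev_thresh * step_std) | (change > amp_thresh)
        tainted[:-lag] |= hit

    # widen every detection by +- t_mask
    clean = np.ones(x.size, dtype=bool)
    for i in np.flatnonzero(tainted):
        clean[max(0, i - n_mask): i + n_mask + 1] = False
    return clean

# toy signal: low-amplitude noise with one spike at sample 500
rng = np.random.default_rng(0)
fs = 1 / 0.128  # sampling rate matching the 0.128 s time step of the recording
x = 0.01 * rng.standard_normal(1000)
x[500] += 1.0
clean = detect_motion_sketch(x, fs)
```

Only the samples around the spike are marked `False`; the rest of the toy signal stays `True`.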

[5]:

# we use Optical Density data for motion artifact detection

fnirs_data = rec["od"]

# define parameters for motion artifact detection. We follow the method from Homer2/3:

# "hmrR_MotionArtifactByChannel" and "hmrR_MotionArtifact".

t_motion = 0.5 * units.s # time window for motion artifact detection

t_mask = 1.0 * units.s # time window for masking motion artifacts

# (+- t_mask s before/after detected motion artifact)

stdev_thresh = 7.0 # threshold for std. deviation of the signal used to detect

# motion artifacts. Default is 50. We set it very low to find

# something in our good data for demonstration purposes.

amp_thresh = 5.0 # threshold for amplitude of the signal used to detect motion

# artifacts. Default is 5.

# to identify motion artifacts with these parameters we call the following function

ma_mask = quality.id_motion(fnirs_data, t_motion, t_mask, stdev_thresh, amp_thresh)

# it hands us a boolean mask (xarray) of the input dimension, where False indicates a

# motion artifact at a given time point:

ma_mask

[5]:

<xarray.DataArray (channel: 28, wavelength: 2, time: 3907)> Size: 219kB

array([[[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True]],

[[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True]],

[[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True]],

...,

[[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True]],

[[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True]],

[[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True]]],

shape=(28, 2, 3907))

Coordinates:

* time (time) float64 31kB 0.0 0.128 0.256 0.384 ... 499.7 499.8 500.0

samples (time) int64 31kB 0 1 2 3 4 5 ... 3901 3902 3903 3904 3905 3906

* channel (channel) object 224B 'S1D1' 'S1D2' 'S1D3' ... 'S8D8' 'S8D16'

source (channel) object 224B 'S1' 'S1' 'S1' 'S1' ... 'S8' 'S8' 'S8'

detector (channel) object 224B 'D1' 'D2' 'D3' 'D9' ... 'D7' 'D8' 'D16'

* wavelength (wavelength) float64 16B 760.0 850.0

The output mask is quite detailed: it still contains all original dimensions (e.g. single wavelengths), which allows us to combine it with a mask from another motion artifact detection method. This is the same approach as for the channel quality metrics above.
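Because `True` marks clean samples, two masks of the same shape can be combined with a logical AND: a sample stays clean only if both detectors agree. A small sketch with plain numpy arrays (the two toy masks are made up for illustration; xarray DataArrays support the same `&` operator):

```python
import numpy as np

# two toy masks for the same time axis, True = clean, False = artifact
mask_a = np.array([True, True, False, True, True])
mask_b = np.array([True, False, True, True, True])

# a sample is clean only if it is clean in both masks
combined = mask_a & mask_b
print(combined)
```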

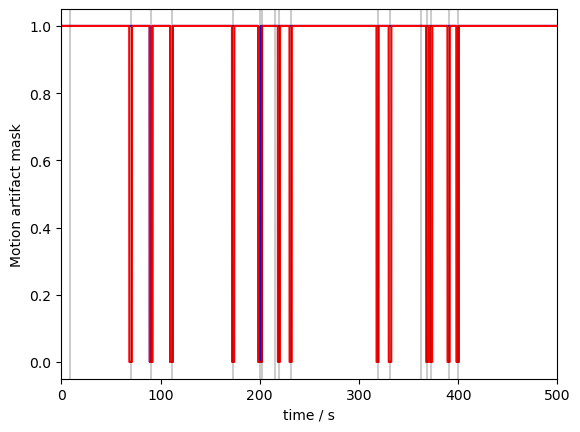

Let us now plot the result for an example channel. Note that a different number of artifacts was identified for each of the two wavelengths, which can sometimes happen:

[6]:

p.figure()

p.plot(ma_mask.time, ma_mask.sel(channel="S3D3", wavelength="760"), "b-")

p.plot(ma_mask.time, ma_mask.sel(channel="S3D3", wavelength="850"), "r-")

# indicate added artefacts

for _, row in timing.iterrows():

    p.axvline(row["onset"], c="k", alpha=.2)

p.xlabel("time / s")

p.ylabel("Motion artifact mask")

p.show()

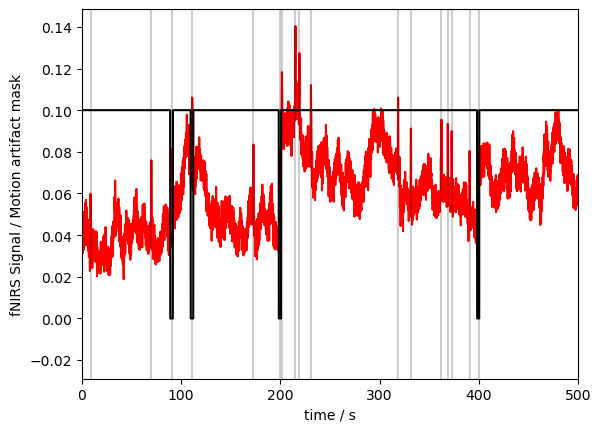

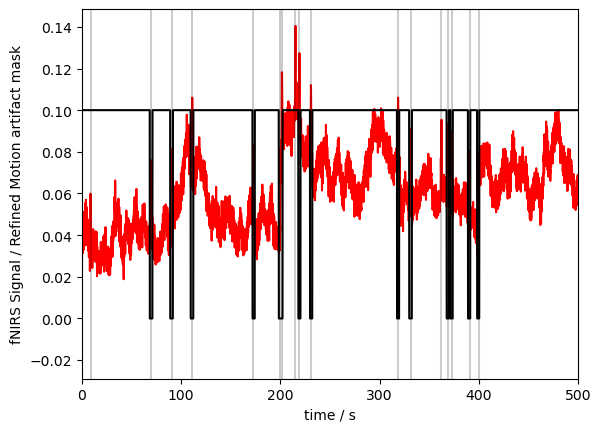

Plotting the mask and the data together (we have to rescale a bit to make both fit):

[7]:

p.figure(figsize=(12, 4))

p.plot(fnirs_data.time, fnirs_data.sel(channel="S3D3", wavelength="760"), "r-")

p.plot(ma_mask.time, ma_mask.sel(channel="S3D3", wavelength="760") / 10, "k-")

# indicate added artefacts

for _, row in timing.iterrows():

    p.axvline(row["onset"], c="k", alpha=.2)

p.xlabel("time / s")

p.ylabel("fNIRS Signal / Motion artifact mask")

p.show()

Refining the MA Mask

By the time we want to correct motion artifacts, we usually no longer need the level of granularity that the mask provides. For instance, we usually want to treat an artifact detected in either wavelength (or chromophore) of a channel as a single artifact that gets flagged for both. We might also want to flag motion artifacts globally, i.e. mask time points for all channels even if only some of them show an artifact. This can be done with the “id_motion_refine” function. The function also returns useful information about the motion artifacts in each channel in “ma_info”.
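The collapsing step can be pictured with a plain numpy array. This is a sketch of the idea only, under the assumption that `True` means clean, so collapsing a dimension amounts to a logical AND along it. It is not the code behind `id_motion_refine`.

```python
import numpy as np

# toy mask with dims (channel=2, wavelength=2, time=6); True = clean
mask = np.ones((2, 2, 6), dtype=bool)
mask[0, 0, 2] = False  # channel 0, first wavelength, sample 2
mask[1, 1, 4] = False  # channel 1, second wavelength, sample 4

# "by_channel"-style: clean only if clean for every wavelength of the channel
by_channel = mask.all(axis=1)        # shape (channel, time)

# "all"-style: clean only if clean for every channel and wavelength
global_mask = mask.all(axis=(0, 1))  # shape (time,)

print(by_channel.shape, global_mask)
```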

[8]:

# refine the motion artifact mask. This function collapses the mask along dimensions

# that are chosen by the "operator" argument. Here we use "by_channel", which will yield

# a mask for each channel by collapsing the masks along either the wavelength or

# concentration dimension.

ma_mask_refined, ma_info = quality.id_motion_refine(ma_mask, "by_channel")

# show the refined mask

ma_mask_refined

[8]:

<xarray.DataArray (channel: 28, time: 3907)> Size: 109kB

array([[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True],

...,

[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True],

[ True, True, True, ..., True, True, True]], shape=(28, 3907))

Coordinates:

* time (time) float64 31kB 0.0 0.128 0.256 0.384 ... 499.7 499.8 500.0

samples (time) int64 31kB 0 1 2 3 4 5 6 ... 3901 3902 3903 3904 3905 3906

* channel (channel) object 224B 'S1D1' 'S1D2' 'S1D3' ... 'S8D8' 'S8D16'

source (channel) object 224B 'S1' 'S1' 'S1' 'S1' ... 'S7' 'S8' 'S8' 'S8'

detector (channel) object 224B 'D1' 'D2' 'D3' 'D9' ... 'D7' 'D8' 'D16'

Now the mask does not have the “wavelength” or “concentration” dimension anymore, and the masks of these dimensions are combined:

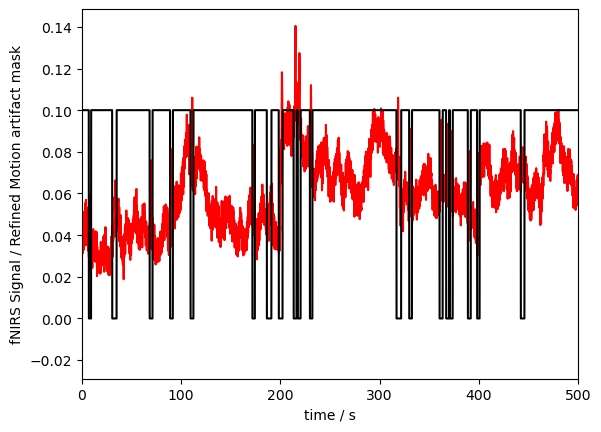

[9]:

# plot the figure

p.figure(figsize=(12, 4))

p.plot(fnirs_data.time, fnirs_data.sel(channel="S3D3", wavelength="760"), "r-")

p.plot(ma_mask_refined.time, ma_mask_refined.sel(channel="S3D3") / 10, "k-")

# indicate added artefacts

for _, row in timing.iterrows():

    p.axvline(row["onset"], c="k", alpha=.2)

p.xlabel("time / s")

p.ylabel("fNIRS Signal / Refined Motion artifact mask")

p.show()

# show the information about the motion artifacts: we get a pandas dataframe telling us

# 1) for which channels artifacts were detected,

# 2) what is the fraction of time points that were marked as artifacts and

# 3) how many artifacts were detected

ma_info

[9]:

| | channel | ma_fraction | ma_count |

|---|---|---|---|

| 0 | S1D1 | 0.101612 | 18 |

| 1 | S1D2 | 0.059125 | 10 |

| 2 | S1D3 | 0.061172 | 11 |

| 3 | S1D9 | 0.059893 | 11 |

| 4 | S2D1 | 0.057077 | 10 |

| 5 | S2D3 | 0.056821 | 10 |

| 6 | S2D4 | 0.039672 | 7 |

| 7 | S2D10 | 0.091119 | 16 |

| 8 | S3D2 | 0.028411 | 6 |

| 9 | S3D3 | 0.061684 | 11 |

| 10 | S3D11 | 0.062964 | 11 |

| 11 | S4D3 | 0.009982 | 2 |

| 12 | S4D4 | 0.035065 | 5 |

| 13 | S4D12 | 0.038137 | 8 |

| 14 | S5D5 | 0.089327 | 17 |

| 15 | S5D6 | 0.061940 | 12 |

| 16 | S5D7 | 0.033786 | 7 |

| 17 | S5D13 | 0.113386 | 19 |

| 18 | S6D5 | 0.059381 | 11 |

| 19 | S6D7 | 0.037881 | 7 |

| 20 | S6D8 | 0.057077 | 10 |

| 21 | S6D14 | 0.047351 | 9 |

| 22 | S7D6 | 0.088047 | 16 |

| 23 | S7D7 | 0.070131 | 13 |

| 24 | S7D15 | 0.056053 | 11 |

| 25 | S8D7 | 0.024059 | 5 |

| 26 | S8D8 | 0.020476 | 4 |

| 27 | S8D16 | 0.048887 | 10 |
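For intuition, the two summary columns can be derived from a boolean mask as sketched below. The helper `summarize_mask` is hypothetical, and counting an artifact as one contiguous run of flagged samples is an assumption of this sketch, not necessarily how cedalion computes `ma_count`.

```python
import numpy as np

def summarize_mask(clean):
    """Return (fraction of flagged samples, number of contiguous flagged runs)."""
    tainted = ~np.asarray(clean, dtype=bool)
    fraction = float(tainted.mean())
    # a run starts wherever tainted switches from False to True
    padded = np.concatenate(([False], tainted))
    count = int(np.sum(~padded[:-1] & padded[1:]))
    return fraction, count

clean = np.array([True, True, False, False, True, False, True, True, True, True])
fraction, count = summarize_mask(clean)
print(fraction, count)
```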

Now we look at the “all” operator, which collapses the mask across all dimensions except time, leading to a single motion artifact mask:

[10]:

# "all" yields a mask that flags an artifact at any given time if flagged for

# any channel, wavelength, chromophore, etc.

ma_mask_refined, ma_info = quality.id_motion_refine(ma_mask, 'all')

# show the refined mask

ma_mask_refined

[10]:

<xarray.DataArray (time: 3907)> Size: 4kB

array([ True, True, True, ..., True, True, True], shape=(3907,))

Coordinates:

* time (time) float64 31kB 0.0 0.128 0.256 0.384 ... 499.7 499.8 500.0

samples (time) int64 31kB 0 1 2 3 4 5 6 ... 3901 3902 3903 3904 3905 3906

[11]:

# plot the figure

p.figure()

p.plot(fnirs_data.time, fnirs_data.sel(channel="S3D3", wavelength="760"), "r-")

p.plot(ma_mask_refined.time, ma_mask_refined/10, "k-")

p.xlabel("time / s")

p.ylabel("fNIRS Signal / Refined Motion artifact mask")

p.show()

# show the information about the motion artifacts: we get a pandas dataframe telling us

# 1) that the mask is for all channels

# 2) fraction of time points that were marked as artifacts for this mask across all

# channels

# 3) how many artifacts were detected in total

ma_info

[11]:

| | channel | ma_fraction | ma_count |

|---|---|---|---|

| 0 | all channels combined | [1.0, 0.9956488354235987] | [0, 1] |

Motion Correction

Here we illustrate the effect of different motion correction methods. Cedalion may offer more methods than those shown here, so make sure to check the API documentation.

[12]:

def compare_raw_cleaned(rec, key_orig, key_raw, key_cleaned, title):

    chwl = dict(channel="S3D3", wavelength="850")

    f, ax = p.subplots(1, 1, figsize=(12, 4))

    ax.plot(

        rec[key_orig].time,

        rec[key_orig].sel(**chwl),

        "r-",

        label=f"{chwl['channel']} {chwl['wavelength']}nm original",

        alpha=.3

    )

    ax.plot(

        rec[key_raw].time,

        rec[key_raw].sel(**chwl),

        "r-",

        label=f"{chwl['channel']} {chwl['wavelength']}nm with artifacts",

    )

    ax.plot(

        rec[key_cleaned].time,

        rec[key_cleaned].sel(**chwl),

        "g-",

        label=f"{chwl['channel']} {chwl['wavelength']}nm cleaned",

    )

    ax.set_ylabel("OD")

    ax.set_xlabel("time / s")

    ax.set_title(title)

    # indicate added artifacts

    for _, row in timing.iterrows():

        p.axvline(row["onset"], c="k", alpha=.2)

    # indicate detected artifacts

    bad_segments = quality.mask_to_segments(ma_mask_refined)

    vbx.plot_segments(

        ax, bad_segments, fmt={"facecolor": "y", "alpha": 0.1}, label="ma_mask_refined"

    )

    ax.legend()

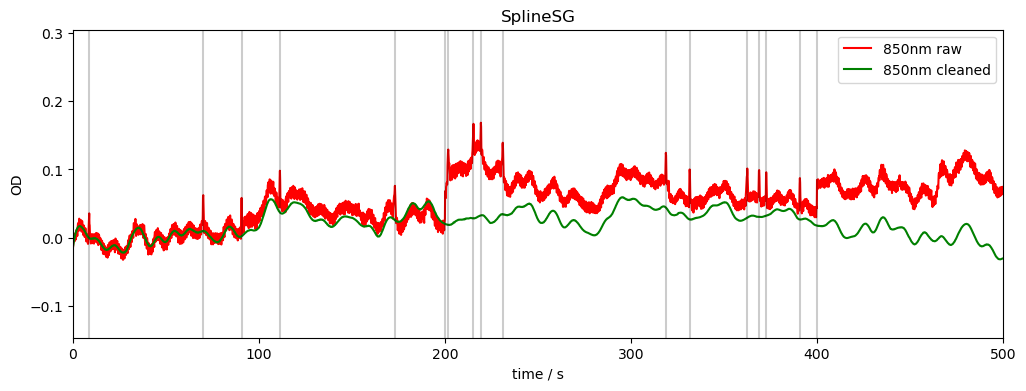

SplineSG method:

identifies baseline shifts in the data and uses spline interpolation to correct them

uses a Savitzky-Golay filter to remove spikes
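As a rough picture of the Savitzky-Golay step: each sample is replaced by the value of a low-order polynomial fitted to a window around it, which suppresses narrow spikes while preserving slow trends. The numpy sketch below is for illustration only. The function `savgol_sketch` and its `window` and `order` parameters are assumptions of this example and do not mirror the arguments of `motion.spline_sg`. In practice one would typically use `scipy.signal.savgol_filter`.

```python
import numpy as np

def savgol_sketch(x, window=21, order=1):
    """Smooth x with a local polynomial fit evaluated at each window centre."""
    half = window // 2  # window is assumed to be odd
    padded = np.pad(np.asarray(x, dtype=float), half, mode="edge")
    offsets = np.arange(-half, half + 1)
    out = np.empty(len(x), dtype=float)
    for i in range(len(x)):
        coeffs = np.polyfit(offsets, padded[i:i + window], order)
        out[i] = np.polyval(coeffs, 0.0)  # fitted value at the centre sample
    return out

# toy signal: a linear ramp with a single-sample spike
ramp = np.linspace(0.0, 1.0, 100)
spiky = ramp.copy()
spiky[50] += 1.0
smoothed = savgol_sketch(spiky)
```

The ramp is reproduced away from the spike, while the spike itself is strongly attenuated.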

[13]:

frame_size = 10 * units.s

rec["od_spline_sg"] = motion.spline_sg(

rec["od"], frame_size=frame_size, p=1

)

compare_raw_cleaned(rec, "od_orig", "od", "od_spline_sg", "SplineSG")

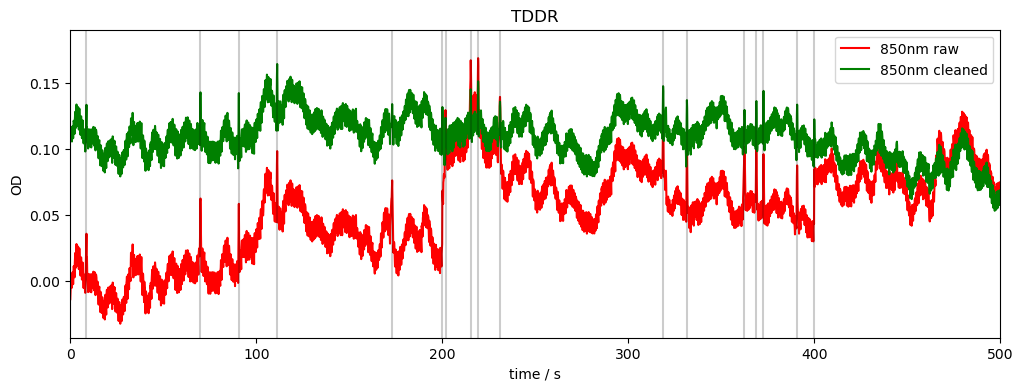

TDDR:

Temporal Derivative Distribution Repair (TDDR) is a motion correction algorithm based on robust regression.

Doesn’t require any user-supplied parameters

See [FLVM19]
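The core idea can be sketched in a few lines of numpy: take the temporal derivative, iteratively estimate robust weights that suppress outlying derivative values, and integrate the reweighted derivative again. This is a simplified sketch, not cedalion's implementation. It omits the split into low- and high-frequency parts used in the published algorithm, and the function name `tddr_sketch` is hypothetical.

```python
import numpy as np

def tddr_sketch(signal, max_iter=50, tune=4.685):
    """Simplified TDDR-style correction for a 1D signal."""
    signal = np.asarray(signal, dtype=float)
    deriv = np.diff(signal)
    w = np.ones_like(deriv)
    mu = np.inf
    for _ in range(max_iter):
        mu_prev = mu
        mu = np.sum(w * deriv) / np.sum(w)     # robust mean of the derivative
        dev = np.abs(deriv - mu)
        sigma = 1.4826 * np.median(dev)        # robust scale estimate
        if sigma == 0:
            break
        r = dev / (sigma * tune)
        w = np.where(r < 1, (1 - r**2) ** 2, 0.0)  # Tukey biweight
        if abs(mu - mu_prev) < 1e-12:
            break
    # outlying derivative values get (near) zero weight before integration
    new_deriv = w * (deriv - mu)
    corrected = np.concatenate(([0.0], np.cumsum(new_deriv)))
    return corrected - corrected.mean()        # centre the result

# toy signal: slow oscillation plus noise, with a baseline shift at sample 500
rng = np.random.default_rng(0)
t = np.arange(1000) * 0.128
shifted = 0.01 * np.sin(2 * np.pi * 0.1 * t) + 0.001 * rng.standard_normal(1000)
shifted[500:] += 1.0
repaired = tddr_sketch(shifted)
```

The single large derivative caused by the shift receives zero weight, so the step disappears after integration.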

[14]:

rec["od_tddr"] = motion.tddr(rec["od"])

compare_raw_cleaned(rec, "od_orig", "od", "od_tddr", "TDDR")

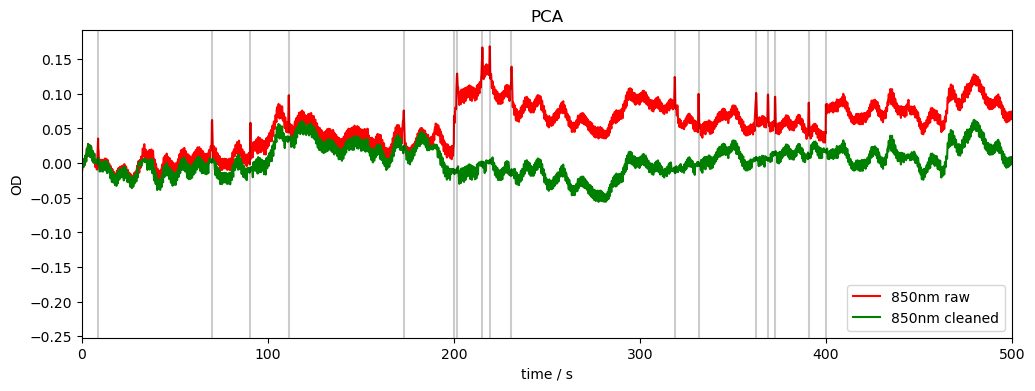

PCA

Applies a PCA filter to the segments flagged as motion artefacts (identified by the mask).

Implementation is based on Homer3 v1.80.2 “hmrR_MotionCorrectPCA.m”
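The principle behind a PCA filter is that motion tends to affect many channels at once and therefore dominates the strongest principal components. The sketch below removes the strongest component from a toy (time x channel) matrix using an SVD. It is an illustration under simplifying assumptions: it filters the whole recording rather than only the masked segments, it assumes roughly zero-mean data, and `pca_filter_sketch` is a hypothetical helper, not `motion.pca`.

```python
import numpy as np

def pca_filter_sketch(data, n_remove=1):
    """Remove the n_remove strongest components from a (time, channel) array."""
    u, s, vt = np.linalg.svd(data, full_matrices=False)
    s_kept = s.copy()
    s_kept[:n_remove] = 0.0          # discard the strongest components
    return (u * s_kept) @ vt         # rebuild the data from the remaining ones

# toy data: small noise plus one artifact shared by all channels
rng = np.random.default_rng(0)
noise = 0.01 * rng.standard_normal((200, 8))
artifact = np.zeros(200)
artifact[90:110] = 1.0
loadings = np.linspace(0.5, 1.5, 8)  # artifact strength per channel
data = noise + np.outer(artifact, loadings)

cleaned = pca_filter_sketch(data, n_remove=1)
```

Removing more components removes more of the shared variance, including any brain signal that happens to load on them, so the number of components is a trade-off.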

[15]:

rec["od_pca"], nSV_ret, svs = motion.pca(rec["od"], ma_mask_refined)

compare_raw_cleaned(rec, "od_orig", "od", "od_pca", "PCA")

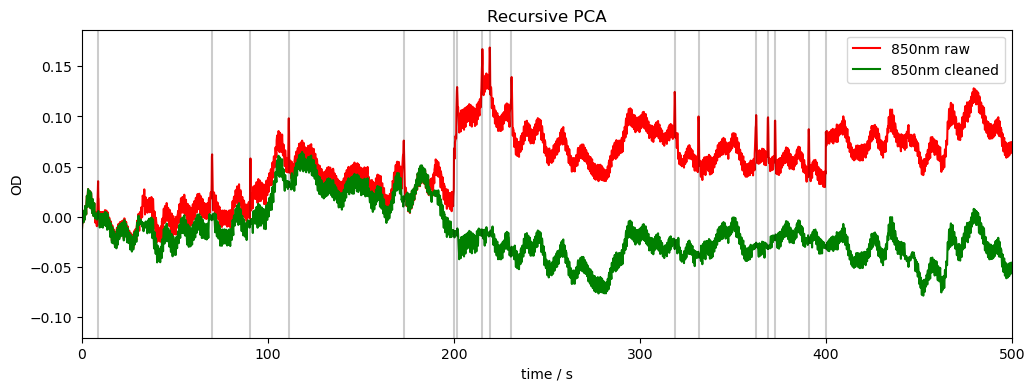

Recursive PCA

If any active channel exhibits signal change greater than STDEVthresh or AMPthresh, then that segment of data is marked as a motion artefact.

motion_correct_PCA is applied to all segments of data identified as a motion artefact.

This is called until maxIter is reached or there are no motion artefacts identified.
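The control flow described above can be sketched as a generic loop. The `detect` and `correct` functions below are toy stand-ins invented for this example (an amplitude threshold and a simple damping step). They only illustrate the detect, correct, repeat structure, not the PCA filter itself.

```python
import numpy as np

def correct_recursively(x, detect, correct, max_iter=5):
    """Alternate detection and correction until the mask is clean or max_iter is hit."""
    n_iter = 0
    clean = detect(x)
    while not clean.all() and n_iter < max_iter:
        x = correct(x, clean)
        clean = detect(x)
        n_iter += 1
    return x, n_iter

# toy stand-ins (assumptions of this sketch only)
def detect(x, amp_thresh=0.5):
    return np.abs(x) <= amp_thresh   # True = clean

def correct(x, clean):
    out = x.copy()
    out[~clean] *= 0.5               # damp the flagged samples
    return out

x = np.array([0.1, 0.2, 3.0, 0.1, -1.5, 0.0])
cleaned, n_iter = correct_recursively(x, detect, correct)
print(cleaned, n_iter)
```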

[16]:

rec["od_pca_r"], svs, n_sv, t_inc = motion.pca_recurse(

rec["od"], t_motion, t_mask, stdev_thresh, amp_thresh

)

compare_raw_cleaned(rec, "od_orig", "od", "od_pca_r", "Recursive PCA")

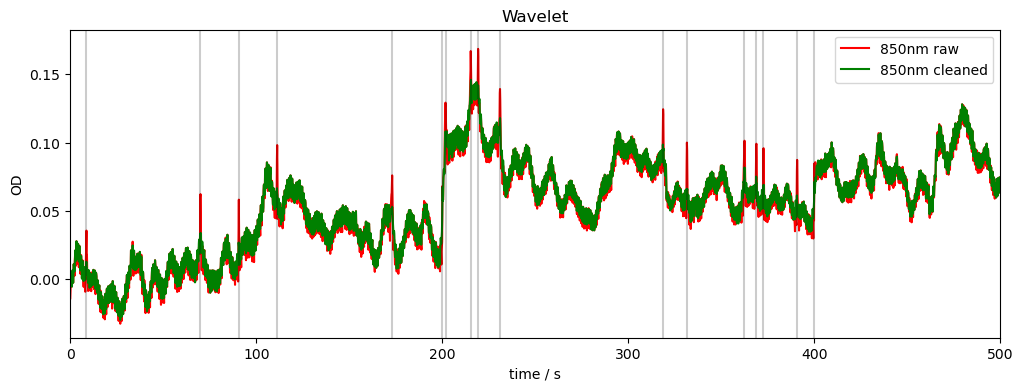

Wavelet Motion Correction

Focused on spike artifacts

Can set iqr factor, wavelet, and wavelet decomposition level.

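A higher iqr factor widens the range of coefficients that are kept, so fewer coefficients are discarded, i.e. the correction is less drastic. A lower iqr factor leads to a more drastic correction.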
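To see how the iqr factor acts, consider the conventional outlier rule in which coefficients outside the band [Q1 - k * IQR, Q3 + k * IQR] are set to zero. That rule is an assumption of this sketch, and the helper `discard_outlier_coeffs` is hypothetical. The wavelet decomposition itself is left out, and the coefficients are simulated with random numbers.

```python
import numpy as np

def discard_outlier_coeffs(coeffs, iqr_factor=1.5):
    """Zero coefficients outside [Q1 - k*IQR, Q3 + k*IQR]; return them and the count."""
    q1, q3 = np.percentile(coeffs, [25, 75])
    iqr = q3 - q1
    outlier = (coeffs < q1 - iqr_factor * iqr) | (coeffs > q3 + iqr_factor * iqr)
    return np.where(outlier, 0.0, coeffs), int(outlier.sum())

# simulated coefficients (stand-in for one wavelet decomposition level)
rng = np.random.default_rng(0)
coeffs = rng.standard_normal(1000)

_, n_discarded_narrow = discard_outlier_coeffs(coeffs, iqr_factor=0.5)
_, n_discarded_wide = discard_outlier_coeffs(coeffs, iqr_factor=3.0)
print(n_discarded_narrow, n_discarded_wide)
```

Under this rule, the narrow band (small factor) discards more coefficients than the wide band (large factor).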

[17]:

rec["od_wavelet"] = motion.wavelet(rec["od"])

compare_raw_cleaned(rec, "od_orig", "od", "od_wavelet", "Wavelet")

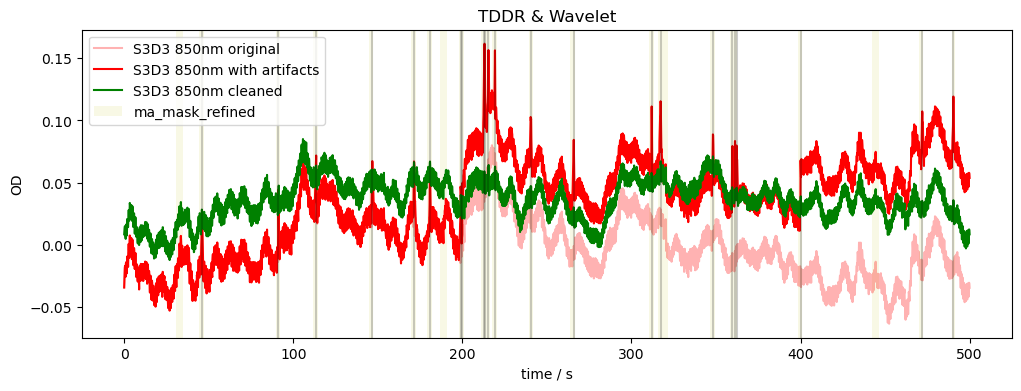

Combining TDDR and Wavelet Motion Correction

[18]:

rec["od_tddr_wavelet"] = motion.wavelet(rec["od_tddr"])

compare_raw_cleaned(rec, "od_orig", "od", "od_tddr_wavelet", "TDDR & Wavelet")